Today, we're introducing our new weblog. We've been busy lately studying the amazing recent advances in artificial intelligence, and we are preparing to launch our own chat.tensorvoice.org endpoint. We figured now is a good time to also migrate to a real blog engine instead of the flat-file system we were using.

We'll be publishing regular updates on our own work as well as general news and "of interest" items on this site, rather than in other locations as we have in the past.

Come back soon for updates on the upcoming chat endpoint. We're currently working behind the scenes to get GPT-J running there. We're also experimenting with LLaMA and other models, but we prefer GPT-J. For anyone who wonders why we're starting with an 18-month-old large language model when so much has changed in the past 18 months, including the release of larger models, the answer is simple:

GPT-J is a remarkable project

Even compared to newer and bigger models, GPT-J is the best designed for our current research purposes. More than any other large language model we've yet encountered, it came into being as a labor of love by very smart people with all the right principles in place. For example, the Pile, the dataset used to train GPT-J, was well curated. We agree with the decisions the team made in developing it, and we appreciate how transparently they documented them. In comparison, the data behind LLaMA is pretty awesome too, but for some reason, that model tends to curse and lean more easily toward the dark tetrad side of the spectrum than the GPT-J family does. And although we like the Alpaca project, we wonder what it would look like with GPT-J as the core instead of LLaMA. In short, we've just not yet seen another AI project meet the standards set by the EleutherAI team for both quality and ethics.

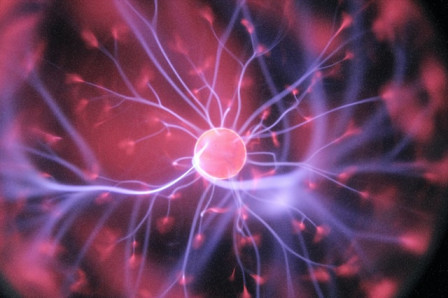

The well-informed reader will say: "OK, then why not GPT-NeoX, which was built by the same team with the same pile of data, but is larger and faster because it was designed to run on GPUs?" We think it's not about size. Or maybe a better way to say that is: it's about size. Because it is small, GPT-J is fast, faster than other, larger models. Yet it appears to be large enough to hold that beautiful ball of light burning at the center of the black box of transformer neural nets. One of our goals is building products that run on lightweight hardware, and we find that this kind of constraint often results in more elegant solutions.

Along those lines, we are eagerly following the 4-bit quantization trend, which shrinks even very large language models while losing virtually no functionality. We're excited about this kind of innovation, because it points to a potential future of billions of decentralized devices. That is quite different from the future envisioned by Big Tech: centralized monopolies that entangle you with telemetry and surveil everything you do on their platforms so they can manipulate you into buying stuff with the "more is more" model.
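To put rough numbers on why quantization matters for lightweight hardware, here is a back-of-the-envelope sketch in Python. It is only arithmetic, not a measurement of any real quantized model: the function name `weight_memory_gib` is ours for illustration, the parameter count is a rounded assumption of about 6 billion for GPT-J, and the estimate covers the weights alone, ignoring quantization metadata (scales and zero points), activations, and any runtime overhead.

```python
def weight_memory_gib(num_params: int, bits_per_weight: int) -> float:
    """Approximate memory needed to hold the weights alone, in GiB."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 2**30


# Assumption: a rounded parameter count for GPT-J (about 6 billion).
GPT_J_PARAMS = 6_000_000_000

# Compare common storage precisions, from full precision down to 4-bit.
for bits in (32, 16, 8, 4):
    size = weight_memory_gib(GPT_J_PARAMS, bits)
    print(f"{bits:>2}-bit weights: ~{size:.1f} GiB")
```

Under these assumptions, the weights drop from roughly 11 GiB at 16 bits to a little under 3 GiB at 4 bits, which is the difference between needing a workstation GPU and fitting on far more modest devices.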

For now, we want to see how far we can go with the "less is more" approach.